Evaluating Neural Networks vs. Gradient Boosting on Regression and Classification Tasks

Project Breakdown

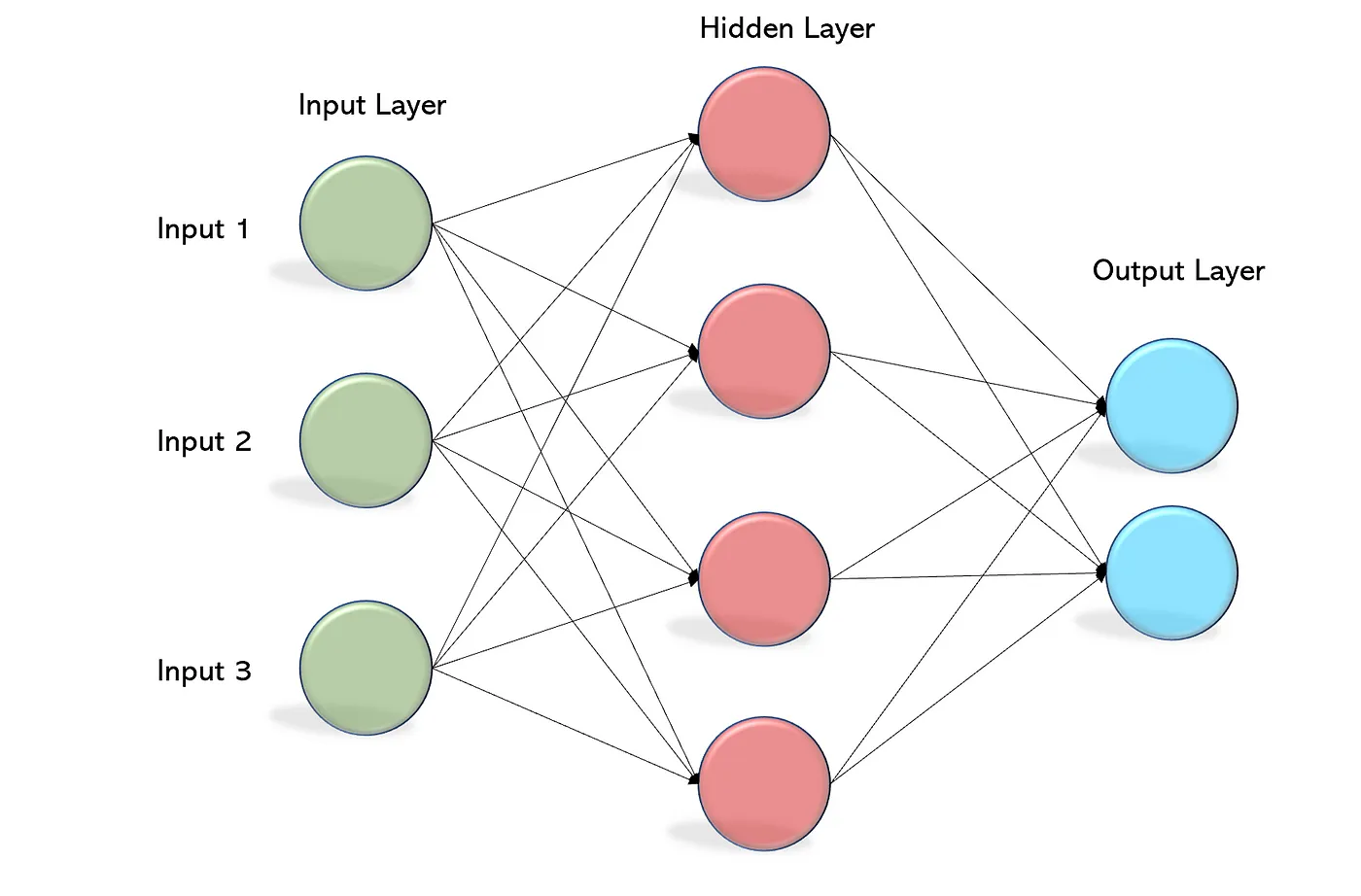

The project workflow runs the same three steps twice, first for regression and then for classification: Data Preprocessing, Building & Evaluating Multilayer Perceptron (MLP) Models, and Comparing with Gradient Boosting (the Gradient Boosting Regressor for regression, the Gradient Boosting Classifier for classification).

Through a series of experiments, we optimize model structures, test different MLP depths, and compare their performance against Gradient Boosting to determine the most effective approach.
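The depth experiment described above can be sketched as follows. This is only an illustration under stated assumptions: it uses scikit-learn (the source does not name a library), a synthetic dataset from `make_regression` in place of the project's data, and hypothetical layer sizes of 32 units.

```python
import warnings

from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.exceptions import ConvergenceWarning
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the project's dataset (assumption, not the real data).
X, y = make_regression(n_samples=400, n_features=8, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Hypothetical depths to compare: one, two, and three hidden layers.
candidates = {
    "mlp_1_layer": (32,),
    "mlp_2_layers": (32, 32),
    "mlp_3_layers": (32, 32, 32),
}

results = {}
for name, hidden in candidates.items():
    # Scale inputs before the MLP; neural networks are sensitive to feature scale.
    model = make_pipeline(
        StandardScaler(),
        MLPRegressor(hidden_layer_sizes=hidden, max_iter=500, random_state=0),
    )
    with warnings.catch_warnings():
        # Small iteration budgets may stop before convergence; silence that warning.
        warnings.simplefilter("ignore", ConvergenceWarning)
        model.fit(X_train, y_train)
    pred = model.predict(X_test)
    results[name] = {
        "mse": mean_squared_error(y_test, pred),
        "r2": r2_score(y_test, pred),
    }

# Gradient Boosting baseline with default settings, scored on the same split.
gbr = GradientBoostingRegressor(random_state=0)
gbr.fit(X_train, y_train)
gbr_pred = gbr.predict(X_test)
results["gradient_boosting"] = {
    "mse": mean_squared_error(y_test, gbr_pred),
    "r2": r2_score(y_test, gbr_pred),
}

for name, scores in results.items():
    print(f"{name}: MSE={scores['mse']:.2f}, R2={scores['r2']:.3f}")
```

Scoring every candidate on the same held-out split keeps the comparison fair. The numbers from this synthetic setup say nothing about the project's actual results.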

Primary Goal

Evaluate MLP architectures for both regression and classification.

Secondary Goal

Compare the best neural network architecture with Gradient Boosting.

Optimize Performance Metrics

Use Mean Squared Error (MSE) and R² score for Regression.
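For reference, both regression metrics can be computed by hand. The sketch below uses the standard definitions, MSE as the mean of squared residuals and R² as one minus the ratio of residual to total sum of squares. The function names and the toy values are illustrative, not taken from the project.

```python
def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """1 - SS_res / SS_tot: the share of target variance the model explains."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Toy values, chosen only to make the arithmetic easy to follow.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.0, 7.5, 9.0]

mse = mean_squared_error(y_true, y_pred)  # (0.25 + 0 + 0.25 + 0) / 4 = 0.125
r2 = r2_score(y_true, y_pred)             # 1 - 0.5 / 20 = 0.975
print(mse, r2)
```

Lower MSE is better, while R² is better the closer it gets to 1. An R² of 0 means the model does no better than always predicting the mean.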

Use Accuracy and Mean Squared Error (MSE) for Classification.
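The source does not say how MSE is applied to classification, so the sketch below shows two plausible readings as assumptions: MSE on predicted probabilities (also known as the Brier score for binary labels) and MSE on hard 0/1 predictions, which equals one minus accuracy. The 0.5 threshold and the toy values are illustrative.

```python
def accuracy(y_true, y_label):
    """Fraction of predictions that match the true class labels."""
    return sum(t == p for t, p in zip(y_true, y_label)) / len(y_true)


def mean_squared_error(y_true, y_score):
    """Mean squared difference between 0/1 labels and predicted scores."""
    return sum((t - s) ** 2 for t, s in zip(y_true, y_score)) / len(y_true)


# Toy binary labels and predicted probabilities for the positive class.
y_true = [1, 0, 1, 1]
y_prob = [0.9, 0.2, 0.4, 0.8]

# Assumed decision threshold of 0.5 to turn probabilities into class labels.
y_label = [1 if p >= 0.5 else 0 for p in y_prob]

acc = accuracy(y_true, y_label)                # 3 of 4 correct = 0.75
mse_prob = mean_squared_error(y_true, y_prob)  # (0.01 + 0.04 + 0.36 + 0.04) / 4
mse_hard = mean_squared_error(y_true, y_label) # one error in four = 0.25
print(acc, mse_prob, mse_hard)
```

On hard labels MSE carries no information beyond accuracy. On probabilities it also rewards confident, well-calibrated predictions.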

Key Findings

Gradient Boosting performed better on both the regression and the classification tasks. Multilayer perceptrons (MLPs) can be effective, but structured tabular data often favors Gradient Boosting methods.

Performance

The Gradient Boosting Regressor outperformed the MLP on the regression task.

Efficiency

Both the MLP and Gradient Boosting performed well in classification, but the Gradient Boosting Classifier (GBC) was slightly better.

Adaptation

Tuning deep networks is challenging, whereas Gradient Boosting adapts to the data with less tuning effort.